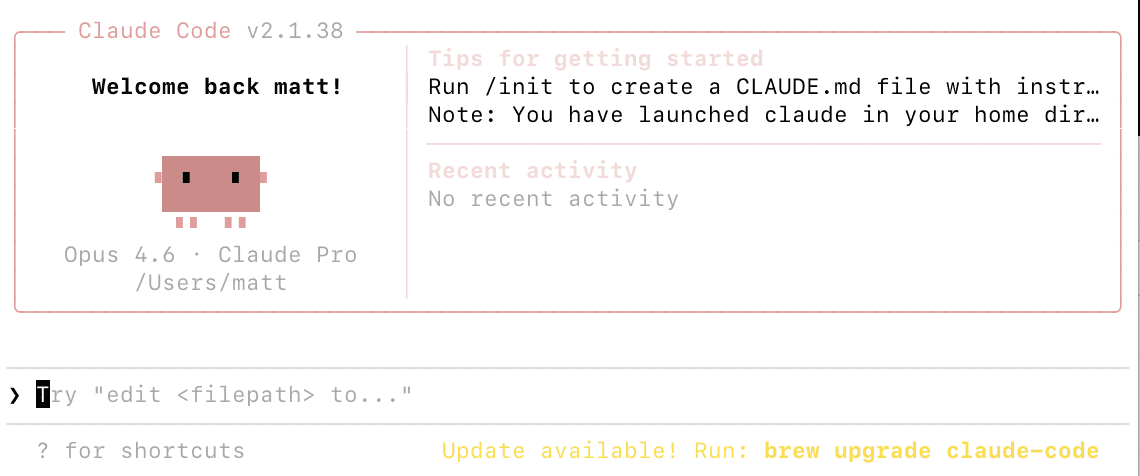

Matt

How Much Does Claude Code Cost? Pricing, Plans, and How to Save Money

Claude Code is available through Anthropic’s subscription plans starting at $20 per month, with higher tiers offering more usage and access to the most powerful models. You can also use pay-as-you-go API billing for more flexibility. This guide breaks down every option so you can choose the plan that matches how you actually work and avoid paying more than you need to.

If you are new to Claude Code, start with our overview of what Claude Code is before diving into pricing. Ready to get started? Head to our installation guide.

Anthropic offers several subscription tiers that include Claude Code. Each uses session-based usage limits that reset every five hours, so your capacity refreshes throughout the day rather than being a single monthly allowance.

The Free plan provides access to Claude.ai chat but very limited Claude Code functionality. For any real development work, a paid plan is necessary.

The Pro plan costs $20 per month billed monthly, or $17 per month with annual billing. It includes Claude Code access with moderate limits, roughly 10 to 40 prompts every five hours depending on complexity. Pro works for learning Claude Code, light development, and occasional coding sessions. It also includes file creation, code execution, Projects, and web search.

The Max plan comes in two tiers. Max 5x at $100 per month gives you five times the Pro usage. Max 20x at $200 per month gives twenty times the Pro limits. Both include access to Opus, Anthropic’s most capable model for complex engineering work, and priority access during high-demand periods. Max is for developers who rely on Claude Code as a primary daily tool.

API Pay-As-You-Go Pricing

If you prefer usage-based billing, use Claude Code with an Anthropic Console account and API key. You pay per token, with costs varying by model. Sonnet runs $3 per million input tokens and $15 per million output tokens. Opus costs significantly more but delivers stronger reasoning.
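To see how per-token billing adds up, here is a small Python sketch that applies the Sonnet rates quoted above. The function name api_cost and the token counts in the example are illustrative assumptions, not output from Claude Code, and prices change over time, so check Anthropic’s current price list before relying on the numbers.

```python
# Rough cost estimate for pay-as-you-go API billing.
# Prices are the Sonnet rates quoted above, in US dollars per million tokens.
SONNET_INPUT_PER_MTOK = 3.00
SONNET_OUTPUT_PER_MTOK = 15.00


def api_cost(input_tokens: int, output_tokens: int,
             input_price: float = SONNET_INPUT_PER_MTOK,
             output_price: float = SONNET_OUTPUT_PER_MTOK) -> float:
    """Return the estimated cost in dollars for the given token counts."""
    # Multiply before dividing so round token counts give exact results.
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    # A hypothetical heavy session: 2M input tokens (files, history, tool
    # results) and 300k output tokens (code and explanations).
    print(f"${api_cost(2_000_000, 300_000):.2f}")  # $10.50
```

Input tokens usually dominate in agentic sessions because file contents and tool results are sent to the model repeatedly, which is why the caching discount described next matters.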

API pricing offers precise cost control and no hard usage caps, which matters for automation and scaling. However, costs vary from month to month and can climb quickly with heavy use. One developer tracked eight months of heavy daily usage that would have cost over $15,000 at API rates but only $800 on the Max plan. For most developers using Claude Code daily, a subscription is the cheaper option.

The API also offers batch processing at 50 percent off for non-urgent tasks, and prompt caching, which bills cached content at only 10 percent of the normal input price after the first request. System prompts and CLAUDE.md files are cached automatically.
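The effect of those two discounts can be sketched in a few lines of Python. This assumes the figures from the paragraph above (Sonnet input at $3 per million tokens, cache hits at 10 percent of that, batch jobs at 50 percent off) and deliberately ignores the extra charge that real prompt caching applies when content is first written to the cache. The helper names and the 50,000-token prefix are hypothetical.

```python
# Sketch of how prompt caching and batch discounts change a bill.
# Assumptions: cache hits cost 10% of the input price after the first
# request, batch jobs get 50% off, and cache-write surcharges are ignored.
INPUT_PER_MTOK = 3.00    # Sonnet input price, $ per million tokens
CACHE_READ_RATE = 0.10   # fraction of the input price charged for cache hits
BATCH_DISCOUNT = 0.50    # fraction of the price removed for batch jobs


def prefix_cost(prefix_tokens: int, requests: int, cached: bool) -> float:
    """Dollar cost of sending the same prompt prefix with `requests` requests."""
    full = prefix_tokens * INPUT_PER_MTOK / 1_000_000
    if not cached or requests == 0:
        return full * requests
    # The first request pays full price; later ones read from the cache.
    return full + full * CACHE_READ_RATE * (requests - 1)


def batch_price(cost: float) -> float:
    """Apply the batch-processing discount to a cost."""
    return cost * (1 - BATCH_DISCOUNT)


if __name__ == "__main__":
    # A hypothetical 50k-token system prompt plus CLAUDE.md, reused 20 times.
    print(f"uncached: ${prefix_cost(50_000, 20, cached=False):.3f}")
    print(f"cached:   ${prefix_cost(50_000, 20, cached=True):.3f}")
```

Under these assumptions the repeated prefix costs $3.00 without caching and roughly $0.44 with it, which is why a stable CLAUDE.md pays for itself.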

Team and Enterprise Plans

For organizations, the Team plan starts at $25 per Standard seat per month with annual billing. Standard seats include collaboration features but not Claude Code. Premium seats at $150 per month add Claude Code access and early features, suited for technical teams. Enterprise plans include all Team features with additional governance and security. Contact Anthropic for Enterprise pricing.
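As a quick worked example with the seat prices above, this Python sketch totals a mixed team’s monthly bill. The team composition is hypothetical, and the Standard price assumes annual billing as stated.

```python
# Monthly cost of a Team plan mix using the seat prices quoted above.
STANDARD_SEAT = 25    # USD per seat per month, annual billing, no Claude Code
PREMIUM_SEAT = 150    # USD per seat per month, includes Claude Code


def team_monthly_cost(standard: int, premium: int) -> int:
    """Total monthly cost in dollars for the given seat counts."""
    return standard * STANDARD_SEAT + premium * PREMIUM_SEAT


if __name__ == "__main__":
    # Hypothetical team: 8 non-engineering seats, 4 engineers using Claude Code.
    print(team_monthly_cost(8, 4))   # 8 * 25 + 4 * 150 = 800
```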

Which Plan Should You Choose?

If you are starting out or use Claude Code a few times per week, Pro at $20 per month provides enough capacity for learning and light tasks. You will hit limits during sustained sessions, but for occasional use it works.

If Claude Code is a regular part of your workflow, Max 5x at $100 per month is the sweet spot. It handles daily professional work including complex debugging, feature implementation, and code review without constant limit interruptions.

For power users running Claude Code continuously across multiple projects or needing unrestricted Opus access, Max 20x at $200 per month offers the highest capacity. If your equivalent API costs would exceed $200 monthly, this tier saves significant money.
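One way to apply that rule of thumb is to compare your estimated monthly API-equivalent spend with each plan’s flat price. The Python sketch here does only that; it does not model usage caps, so a plan that “pays off” on price may still be too small for your workload. The plan table and function name are assumptions for illustration.

```python
# Break-even check between pay-as-you-go API billing and flat plans.
PLAN_PRICES = {"Pro": 20, "Max 5x": 100, "Max 20x": 200}  # USD per month


def plans_that_pay_off(api_equivalent_per_month: float) -> list[str]:
    """Plans whose flat price is lower than the estimated API spend.

    Ignores usage caps: a plan can be cheaper on paper yet too small for
    heavy daily use.
    """
    ranked = sorted(PLAN_PRICES.items(), key=lambda item: item[1])
    return [plan for plan, price in ranked if price < api_equivalent_per_month]


if __name__ == "__main__":
    # The anecdote above: about $15,000 of API-equivalent usage over 8 months.
    print(plans_that_pay_off(15_000 / 8))   # ['Pro', 'Max 5x', 'Max 20x']
    print(plans_that_pay_off(60))           # ['Pro']
```

Once more than one plan pays off on price, capacity becomes the deciding factor, which is where the tier descriptions above come in.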

If your usage is unpredictable or you are integrating Claude Code into automated pipelines, API billing provides flexibility but requires monitoring. Start with a small amount of credits to understand your patterns before committing.

How to Track and Reduce Costs

The /cost command shows your session’s token usage and estimated cost, which is most useful for API users. For continuous visibility, configure the status line to display token usage at all times.

The most effective cost reduction strategies are clearing context between tasks with /clear so you stop paying for irrelevant history, using /compact to summarize long conversations, and choosing Sonnet over Opus for routine tasks. Sonnet handles most coding work well at much lower cost per token.
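To see why clearing stale history saves money, consider that each new message resends the conversation so far. The toy Python model here counts input tokens across a session with and without a periodic /clear. All token counts are made up, and the model ignores prompt caching, which discounts repeated prefixes in practice.

```python
# Toy model: every request carries the base prompt plus all history so far.
def total_input_tokens(turns: int, base: int, per_turn: int,
                       clear_every: int = 0) -> int:
    """Sum of input tokens sent across `turns` messages.

    base: tokens sent every time (system prompt, CLAUDE.md).
    per_turn: tokens each exchange adds to the history.
    clear_every: if > 0, history is wiped (like /clear) after that many turns.
    """
    total = 0
    history = 0
    for turn in range(1, turns + 1):
        total += base + history + per_turn   # full context for this request
        history += per_turn
        if clear_every and turn % clear_every == 0:
            history = 0
    return total


if __name__ == "__main__":
    print(total_input_tokens(40, 5_000, 2_000))                  # 1840000
    print(total_input_tokens(40, 5_000, 2_000, clear_every=10))  # 640000
```

Because the cost of an uninterrupted session grows roughly with the square of its length, clearing between unrelated tasks cuts input tokens by about two thirds in this example.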

For teams, administrators set workspace spend limits in the Anthropic Console and view usage reports. The average API cost is roughly $6 per developer per day, with 90 percent of users staying below $12 daily. For configuration details, see our setup guide.
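Those averages translate into a rough monthly budget you can use when setting a workspace spend limit. This Python sketch multiplies the quoted daily figures by an assumed 22 working days; the function name and team size are hypothetical.

```python
# Back-of-the-envelope team budget from the averages quoted above.
AVG_DAILY = 6.0    # average API cost per developer per day (USD)
P90_DAILY = 12.0   # 90 percent of users stay below this per day (USD)


def monthly_budget(developers: int, working_days: int = 22) -> tuple[float, float]:
    """Return (typical, conservative) monthly spend estimates in dollars."""
    typical = developers * AVG_DAILY * working_days
    conservative = developers * P90_DAILY * working_days
    return typical, conservative


if __name__ == "__main__":
    typical, conservative = monthly_budget(10)   # hypothetical 10-person team
    print(f"typical ${typical:,.0f}, conservative ${conservative:,.0f}")
```

Applying the 90th-percentile figure to every developer gives a deliberately conservative ceiling, a reasonable starting point for a spend limit.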

Understanding Usage Limits

Anthropic introduced weekly rate limits in August 2025 on top of the five-hour session resets. These primarily affect power users running Claude Code continuously and impact less than five percent of subscribers according to Anthropic. Pro and Max plans have different thresholds, with Max getting proportionally higher caps.

Claude Code sessions consume more capacity than regular chat because they include system instructions, full file contexts, long code outputs, and multiple tool calls per interaction. You will use your allocation faster than in the Claude.ai chat interface. Max subscribers can purchase additional usage beyond rate limits at standard API rates if needed.

For more on how tokens and context windows work technically, read how Claude Code works under the hood. To learn practical efficiency techniques, see our guide to using Claude Code effectively.

How Does Claude Code Work? Models, Architecture, and MCP Integration Explained

Claude Code is more than a chatbot that writes code. It is an agentic system that reads your files, executes terminal commands, manages git operations, connects to external services, and coordinates with other tools, all while maintaining awareness of your full codebase. Understanding how it works helps you use it more effectively, choose the right model, manage context, and extend it with external tools through MCP.

For a general overview of what Claude Code does, see what is Claude Code. To start using it right away, check out how to use Claude Code.

Claude Code runs on Anthropic’s Claude family of large language models. By default, it uses Claude Sonnet, which balances coding capability and speed well for most development tasks. Subscribers on Max plans also get access to Claude Opus, the most capable model, which excels at complex multi-step reasoning, architectural decisions, and debugging subtle issues.

Switch between models during a session with the /model command. Sonnet is faster and cheaper per token, ideal for routine tasks like writing tests, fixing lint errors, and generating boilerplate. Opus takes longer per response but produces higher quality results for challenging problems. Haiku is also available as the fastest, most economical option for simple tasks.

The specific model versions evolve as Anthropic ships updates. Claude Code automatically uses the latest versions, so you benefit from improvements without changing any configuration. The models have large context windows, allowing Claude Code to process significant amounts of code and conversation history in a single session.

How Claude Code Processes Your Requests

When you send a message, Claude Code assembles a prompt that includes your system configuration from CLAUDE.md files, conversation history, referenced file contents, and your current request. This prompt goes to the Claude API, which returns a response containing text explanations, code suggestions, or tool use requests.

Tool use is what makes Claude Code agentic. The model can request to read files, write files, execute bash commands, search your codebase, and interact with MCP servers. Each tool use requires your approval by default unless you have pre-configured permissions. After a tool executes, the result feeds back to the model for the next step, creating an iterative loop.

This means a single request from you might result in Claude Code reading several files, running a test suite, identifying failures, editing code, running tests again, and reporting success, all as part of one interaction. The model decides which tools to use and in what order based on your request and the results at each step.
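The loop described above can be reduced to a short Python sketch. It is a conceptual model rather than Claude Code’s implementation: the scripted “model”, the tool names run_tests and edit_file, and the approve callback (standing in for the permission prompt) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Reply:
    text: str
    tool: Optional[str] = None   # tool the model wants to call, if any
    arg: str = ""


def run_agent(model: Callable[[list], Reply],
              tools: dict[str, Callable[[str], str]],
              request: str,
              approve: Callable[[str, str], bool],
              max_steps: int = 10) -> list[tuple[str, str]]:
    """Loop until the model answers without requesting a tool."""
    transcript = [("user", request)]
    for _ in range(max_steps):
        reply = model(transcript)
        transcript.append(("assistant", reply.text))
        if reply.tool is None:
            break                                   # final answer
        if not approve(reply.tool, reply.arg):      # the permission prompt
            transcript.append(("tool", f"{reply.tool} denied by user"))
            continue
        result = tools[reply.tool](reply.arg)
        transcript.append(("tool", result))         # fed back on the next call
    return transcript


def scripted_model(steps: list[Reply]) -> Callable[[list], Reply]:
    """Stand-in for the real model: returns pre-written replies in order."""
    remaining = iter(steps)
    return lambda transcript: next(remaining)


if __name__ == "__main__":
    state = {"fixed": False}

    def run_tests(_: str) -> str:
        return "all passed" if state["fixed"] else "1 failed: test_total"

    def edit_file(path: str) -> str:
        state["fixed"] = True
        return f"patched {path}"

    model = scripted_model([
        Reply("Running the test suite first.", "run_tests"),
        Reply("test_total fails; fixing cart.py.", "edit_file", "cart.py"),
        Reply("Re-running the tests.", "run_tests"),
        Reply("All tests pass now."),
    ])
    for role, text in run_agent(model, {"run_tests": run_tests, "edit_file": edit_file},
                                "Fix the failing test", approve=lambda tool, arg: True):
        print(f"{role:>9}: {text}")
```

The key design point is that the loop, not the user, decides when to call the model again: every tool result becomes new context, and the session ends only when the model replies without a tool request.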

Context Windows and Token Management

Every session operates within a context window, the total amount of text the model considers at once. This includes system prompts, CLAUDE.md files, conversation history, file contents, and tool results. As sessions grow, context fills up and needs management.

Claude Code handles this through auto-compaction, which summarizes conversation history when approaching limits. You can trigger manual compaction with /compact, optionally specifying what to prioritize. The /clear command discards context entirely for switching to unrelated tasks.
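Auto-compaction can be pictured as replacing older messages with a summary once the context grows too large, while keeping the most recent exchanges verbatim. In this Python sketch the word-count token estimate, the limit, and the summarize stub are assumptions; it illustrates the idea, not how Claude Code decides when or what to compact. The optional focus argument mirrors the way /compact accepts instructions about what to prioritize.

```python
def estimate_tokens(messages: list[str]) -> int:
    # Crude stand-in for a tokenizer: one token per whitespace-separated word.
    return sum(len(m.split()) for m in messages)


def summarize(messages: list[str], focus: str = "") -> str:
    # Placeholder for asking the model to write a summary of these messages.
    note = f" (focus: {focus})" if focus else ""
    return f"[summary of {len(messages)} earlier messages{note}]"


def maybe_compact(messages: list[str], limit: int, keep_recent: int = 2,
                  focus: str = "") -> list[str]:
    """Summarize older messages if the estimated size exceeds `limit`."""
    if estimate_tokens(messages) <= limit or len(messages) <= keep_recent:
        return messages
    split = len(messages) - keep_recent
    return [summarize(messages[:split], focus)] + messages[split:]
```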

Prompt caching reduces both cost and latency. System prompts and CLAUDE.md content that does not change between messages gets cached, so subsequent interactions process faster at reduced cost. This is why well-structured CLAUDE.md files matter for both quality and efficiency. For detailed cost management, see our pricing guide.

How Claude Code Reads and Edits Your Codebase

Claude Code uses agentic search to understand your codebase rather than requiring manual file selection. When you ask about your project, it searches file names, contents, and structure to find relevant code, working like an AI-powered grep. For file editing, changes are proposed as diffs you review before accepting. In the terminal, diffs display inline. In VS Code and other IDEs, they appear as visual overlays. See our guide on using Claude Code in VS Code for the IDE experience.
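A rough idea of what a grep-style search over file names and contents involves is shown in this Python sketch. It is not how Claude Code performs agentic search (the model chooses its own queries and follow-up reads); the skipped directory names and plain substring matching are simplifying assumptions.

```python
from pathlib import Path

SKIP_DIRS = {".git", "node_modules", "__pycache__"}


def search_codebase(root: str, term: str) -> list[tuple[str, int, str]]:
    """Return (relative path, line number, text) for each match.

    A match in the file name itself is reported with line number 0.
    """
    hits = []
    for path in sorted(Path(root).rglob("*")):
        rel = path.relative_to(root)
        if not path.is_file() or any(part in SKIP_DIRS for part in rel.parts):
            continue
        if term in path.name:
            hits.append((rel.as_posix(), 0, path.name))
        try:
            lines = path.read_text(encoding="utf-8").splitlines()
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        for number, line in enumerate(lines, start=1):
            if term in line:
                hits.append((rel.as_posix(), number, line.strip()))
    return hits
```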

Claude Code can spawn multiple sub-agents for parallel work. A lead agent coordinates the task and assigns parts to specialized sub-agents, each with their own context window. This is powerful for large refactoring jobs spanning many files.
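The lead-agent pattern can be sketched with Python’s standard thread pool: the lead splits the work, each sub-agent keeps its own context list, and the lead collects the reports. The worker here is a stub and the function names are hypothetical; a real sub-agent would run its own model and tool-use loop.

```python
from concurrent.futures import ThreadPoolExecutor


def sub_agent(task: str, files: list[str]) -> dict:
    # Each sub-agent builds its own context; nothing is shared with siblings.
    context = [f"Task: {task}"] + [f"File: {name}" for name in files]
    # Stub "work": a real sub-agent would call the model and tools here.
    return {"task": task, "files": files, "context_items": len(context)}


def lead_agent(task: str, files: list[str], workers: int = 3) -> list[dict]:
    # Deal files out round-robin so each sub-agent gets a similar share.
    chunks = [files[i::workers] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(sub_agent, task, chunk) for chunk in chunks if chunk]
        return [future.result() for future in futures]


if __name__ == "__main__":
    files = ["api/users.py", "api/orders.py", "db/models.py", "db/migrate.py", "web/app.ts"]
    for report in lead_agent("rename customer_id to account_id", files):
        print(report)
```

Keeping each context separate is what lets a large refactor proceed without any single context window having to hold every file.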

How to Add MCP Servers to Claude Code

The Model Context Protocol is an open standard that extends Claude Code beyond your local codebase. By adding MCP servers, you connect Claude Code to GitHub, Jira, Google Drive, Slack, databases, and any custom tooling you build.

Add servers with the “claude mcp add” command, specifying the server name, transport type, and connection details. Configure them at the project level in a .mcp.json file in your project root, or globally in your Claude Code settings. Project-level configuration is best for servers specific to one project. Global configuration suits general-purpose servers like Slack or Google Drive.
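For reference, a project-level .mcp.json maps server names to launch details under a top-level mcpServers key, with command, args, and env entries for locally launched servers. The Python sketch here builds and writes such a file; the server name dev-db, the node command, the script path, and the DATABASE_URL value are hypothetical placeholders, and real secrets should stay out of the file.

```python
import json
from pathlib import Path


def mcp_config(servers: dict) -> str:
    """Serialize server definitions in the .mcp.json layout."""
    return json.dumps({"mcpServers": servers}, indent=2) + "\n"


def write_mcp_config(project_root: str, servers: dict) -> Path:
    path = Path(project_root) / ".mcp.json"
    path.write_text(mcp_config(servers))
    return path


if __name__ == "__main__":
    servers = {
        "dev-db": {                                   # hypothetical server name
            "command": "node",                        # process to launch
            "args": ["./tools/db-mcp-server.js"],     # hypothetical script path
            "env": {"DATABASE_URL": "postgres://localhost/dev"},
        }
    }
    print(mcp_config(servers))  # preview only; call write_mcp_config to save
```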

For servers requiring OAuth authentication, Claude Code supports pre-configured client credentials. Use the --client-id and --client-secret flags with “claude mcp add” for servers that do not support Dynamic Client Registration.

Common MCP Server Setups

GitHub is one of the most popular MCP servers. It lets Claude Code read issues, create branches, open and review pull requests, and manage repository workflows. Combined with code editing, this creates a complete loop from issue to merged PR without leaving your terminal.

Google Drive connects Claude Code to your documents, useful for implementing features based on specs stored outside your repo. Slack integration enables automated status updates after completing tasks. Database servers let Claude query your development databases to understand schemas, verify fixes, or generate migrations. Custom MCP servers can wrap any API or internal tool.

Managing MCP Permissions and Troubleshooting

When you add a server, Claude Code asks permission before using its tools. Pre-configure permissions with wildcard syntax in your settings. “mcp__servername__*” allows all tools from a server, while more granular patterns restrict specific tools. The /permissions command shows and manages your rules.
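MCP tools are named mcp__ followed by the server name, two underscores, and the tool name, which is why a single wildcard rule can cover a whole server. The Python sketch here uses fnmatch to illustrate the matching semantics; Claude Code’s own matcher may differ in details, and the server and tool names are hypothetical.

```python
from fnmatch import fnmatchcase


def is_allowed(tool_name: str, allow_rules: list[str]) -> bool:
    """True if any allow rule matches the tool name (shell-style wildcards)."""
    return any(fnmatchcase(tool_name, rule) for rule in allow_rules)


rules = ["mcp__github__*", "mcp__jira__get_issue"]  # hypothetical servers/tools
for tool in ["mcp__github__create_pull_request", "mcp__jira__get_issue",
             "mcp__jira__delete_issue", "mcp__slack__post_message"]:
    print(tool, "allowed" if is_allowed(tool, rules) else "asks first")
```

Anything not matched by an allow rule falls back to a permission prompt, so narrow rules like the Jira one are a safe way to pre-approve read-only tools.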

If an MCP server shows as “pending” and never connects, verify the server process is running and accessible from your terminal environment. WSL users should ensure the server is reachable from the Linux side. The /debug command helps troubleshoot connection issues. Servers loaded from .mcp.json can get stuck pending in non-interactive mode with -p; restarting in interactive mode resolves this.

Be selective about which servers you connect. Each adds tool definitions to your context, consuming tokens. Five servers with 10 tools each means 50 tool definitions on every message. Only connect servers you actively use. Use project-level .mcp.json so servers only load for relevant projects.
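The arithmetic behind that warning is simple enough to sketch. The 250 tokens per tool definition used here is an assumed average for illustration; real definitions vary widely by server.

```python
def tool_definition_tokens(tools_per_server: list[int], tokens_per_tool: int = 250) -> int:
    """Tokens added to every request by the connected servers' tool definitions."""
    return sum(tools_per_server) * tokens_per_tool


per_message = tool_definition_tokens([10] * 5)   # five servers, 10 tools each
print(per_message)          # 12500
print(per_message * 100)    # 1250000 over a 100-message session
```

At the $3 per million input tokens quoted in the pricing guide, those 1,250,000 tokens would cost $3.75 before any caching discount, spent purely on describing tools.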

How to Access Claude Code

Claude Code is accessible through several surfaces. The terminal CLI runs on macOS, Linux, and Windows. IDE extensions work in VS Code, Cursor, Windsurf, and JetBrains. The web interface at claude.ai/code runs in your browser without local installation. Sessions move between surfaces with /teleport.

All access methods require a Claude subscription or Anthropic Console account. For pricing details, see our cost guide. To get set up, follow our installation guide and then the configuration walkthrough.

What Is Claude Code? A Complete Guide to Anthropic’s AI Coding Assistant

Claude Code is an AI-powered agentic coding tool built by Anthropic that lives directly in your terminal. Unlike traditional code assistants that autocomplete lines or answer questions in a chat window, Claude Code understands your entire codebase, executes commands, edits files across your project, manages git workflows, and handles complex development tasks through natural language conversation.

Whether you are a seasoned developer looking to speed up repetitive tasks or someone exploring AI-assisted development for the first time, Claude Code represents a fundamentally different approach to writing software. Instead of switching between a chatbot and your editor, you stay in your terminal and describe what you need done in plain English.

Claude Code at a Glance

At its core, Claude Code is a command-line interface that gives you conversational access to Anthropic’s most capable AI models. You navigate to your project folder in your terminal, launch Claude Code, and start describing what you want to accomplish. Claude Code then reads your files, understands the context, and takes action by editing code, running tests, creating new files, or executing shell commands on your behalf.

Claude Code is not limited to the terminal, though. It integrates with popular editors like VS Code, Cursor, Windsurf, and JetBrains IDEs through native extensions. You can even run it directly in your browser at claude.ai/code without any local setup, or hand off sessions between devices using the teleport feature. For a detailed walkthrough of editor integration, see our guide on how to use Claude Code in VS Code and Cursor.

Who Makes Claude Code?

Claude Code is developed and maintained by Anthropic, the AI safety company founded in 2021 by former OpenAI researchers including Dario and Daniela Amodei. Anthropic builds the Claude family of large language models that power the tool, and focuses on creating reliable, interpretable AI systems. Claude Code is one of their flagship developer products alongside the Claude API and Claude.ai chat interface.

Anthropic maintains a public GitHub repository at github.com/anthropics/claude-code where you can report issues, read the changelog, and follow development progress. New releases ship regularly and reach native installations through automatic background updates.

What Is the Claude Code CLI?

When people say “Claude Code CLI,” they mean the core command-line interface that you interact with in your terminal. After installation, you type “claude” to launch an interactive session. From there, you type natural language requests, use slash commands like /init to generate a project configuration file or /clear to reset context, and reference specific files using the @ symbol.

The CLI supports several flags for different workflows. Running “claude -p” followed by a prompt gives you a single response without entering interactive mode, which is ideal for scripting and automation. The “claude -c” flag resumes your last conversation, and “claude --model” lets you choose which AI model to use. There is no separate CLI package to download; the main installation gives you everything. For a deep dive into terminal workflows, see how to use Claude Code in the terminal.
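Print mode is what makes scripting possible. The Python sketch here wraps “claude -p” with subprocess and passes text on standard input, the scripted equivalent of piping a log into the command. It assumes the claude binary is on your PATH; the helper names, the model alias sonnet, and the prompt are illustrative.

```python
import subprocess
from typing import Optional


def build_command(prompt: str, model: Optional[str] = None) -> list[str]:
    """Argument list for a single non-interactive request."""
    cmd = ["claude", "-p", prompt]
    if model:
        cmd += ["--model", model]
    return cmd


def ask_claude(prompt: str, stdin_text: str = "", model: Optional[str] = None) -> str:
    """Run one request; stdin_text is piped in like `cat app.log | claude -p ...`."""
    result = subprocess.run(build_command(prompt, model), input=stdin_text,
                            capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Show the command that would run; call ask_claude() to actually run it.
    print(build_command("Summarize the errors in this log", model="sonnet"))
```

Passing arguments as a list rather than a shell string avoids quoting problems when prompts contain spaces or special characters.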

What Makes Claude Code Different From Other AI Coding Tools?

Most AI coding assistants work as plugins inside your editor, offering suggestions as you type. Claude Code takes a different philosophy by following the Unix tradition of composable tools. You can pipe logs into it, run it inside CI/CD pipelines, chain it with other command-line utilities, or spawn multiple agents working on different parts of a task simultaneously.

Claude Code also has full agentic capabilities. It does not just suggest code and wait for you to copy and paste. It reads files, writes changes, executes terminal commands, runs your test suite, commits to git, and even opens pull requests. You review and approve each action, keeping you in control while Claude handles the heavy lifting.

The tool connects to external services through the Model Context Protocol, or MCP, which lets it read design docs from Google Drive, update tickets in Jira, pull data from Slack, or interact with any custom tooling you build. To understand how all of this works under the hood, read our deep dive on how Claude Code works, what models it uses, and how to extend it with MCP.

What Can You Do With Claude Code?

On the routine side, Claude Code excels at writing tests for untested code, fixing lint errors across a project, resolving merge conflicts, updating dependencies, and generating release notes. These are the tedious chores that eat up developer time, and Claude Code automates them through simple natural language prompts.

For more complex work, Claude Code implements entire features spanning multiple files, refactors large codebases, debugs tricky issues by reading error logs and tracing through code paths, and explains unfamiliar codebases to help you onboard faster. It supports multi-agent workflows where a lead agent coordinates several sub-agents working on different components simultaneously.

How Good Is Claude Code?

Claude Code’s quality depends on which model you use and how well you structure your requests. With Opus, the most powerful model, it handles complex architectural reasoning, multi-file refactoring, and subtle debugging with strong results. It consistently ranks among the top AI coding tools for understanding large codebases and maintaining context across extended development sessions.

For routine tasks like writing tests, implementing features from clear specifications, fixing bugs, and managing git workflows, Claude Code is highly effective with any model tier. It is weaker in areas that require knowledge of proprietary internal frameworks or very recently released libraries. The CLAUDE.md configuration file helps mitigate this by giving Claude Code explicit context about your project’s specifics.

How Much Does Claude Code Cost?

Claude Code is included with Anthropic subscription tiers. The Pro plan at $20 per month gives you access with moderate usage limits. Max plans at $100 and $200 per month offer significantly higher usage caps and access to Opus. You can also use pay-as-you-go API billing through the Anthropic Console. For a full breakdown of every plan, usage limits, and cost optimization tips, check out our detailed Claude Code pricing guide.

Getting Started

Setting up Claude Code takes just a few minutes on macOS, Linux, or Windows. Anthropic provides native installers that do not require Node.js. After installing, you authenticate with your Anthropic account and navigate to your project directory to start your first session.

Follow our step-by-step installation guide covering Mac, Windows, and Linux. Once installed, our guide on setting up, configuring, and keeping Claude Code updated walks you through authentication, CLAUDE.md files, and your first productive session. When you are ready to dive in, see our complete practical guide to using Claude Code.

How to Install Claude Code on Mac, Windows, and Linux

Installing Claude Code takes just a few minutes regardless of your operating system. Anthropic now offers native installers as the recommended method, and the entire process goes from download to first coding session in about five minutes. This guide covers every installation method for every platform so you can get Claude Code running quickly.

If you are new to the tool and want to understand what it does before installing, start with our overview of what Claude Code is and what it can do.

Claude Code runs on macOS 10.15 or later, most Linux distributions including Ubuntu 20.04 and Debian 10, and Windows 10 or later. You need at least 4GB of RAM and an active internet connection. The native installer does not require Node.js, which simplifies setup considerably compared to older npm-based methods.

You also need either a Claude Pro or Max subscription, or an Anthropic Console account with API credits. Claude Code authenticates through your browser on first launch, so make sure you have an active account ready. Not sure which plan to pick? See our Claude Code pricing breakdown.

Installing Claude Code on Mac

Open Terminal on your Mac. You can find it in Applications, then Utilities, or press Command+Space and type Terminal. Run the native install script from Anthropic’s official source. The installer downloads the correct binary for your Mac architecture, whether Intel or Apple Silicon, and configures your PATH automatically.

After the script finishes, verify the installation by typing “claude --version” in your terminal. You should see a version number without errors. The native macOS binary is signed by Anthropic PBC and notarized by Apple, so you should not see Gatekeeper warnings. If your Mac blocks it anyway, open System Settings (System Preferences on older macOS versions), go to Privacy & Security (Security & Privacy on older versions), and allow the installer.

Mac users who prefer Homebrew can install with the brew install command instead. The important difference is that Homebrew installations do not auto-update. You need to manually run “brew upgrade claude-code” periodically. For most Mac users, the native installer is the better choice because of its automatic background updates.

Installing Claude Code on Windows

Windows users have two solid options: native installation or WSL.

For native installation, use WinGet from PowerShell or Command Prompt by running “winget install Anthropic.ClaudeCode” and following the prompts. After installation, verify with “claude --version” in Git Bash, PowerShell, or Command Prompt. Native Windows requires Git Bash for the full Claude Code experience. If you do not have it, install Git for Windows from the official Git website, which includes Git Bash. Windows Terminal is recommended as your terminal emulator. Note that WinGet installations do not auto-update, so run “winget upgrade Anthropic.ClaudeCode” periodically.

For WSL installation, open PowerShell as Administrator and run “wsl --install -d Ubuntu” to set up WSL with Ubuntu. Reboot when prompted. After rebooting, open your Ubuntu terminal, update packages with “sudo apt update && sudo apt upgrade -y”, and install Git with “sudo apt install git -y”. Then run the Claude Code install script from your Ubuntu terminal, just as you would on native Linux. WSL 2 is recommended over WSL 1 because it supports Bash tool sandboxing for enhanced security.

For WSL users who also use VS Code, connect VS Code to your WSL environment by running “code .” from your WSL terminal. This lets you use the Claude Code extension while Claude Code runs in WSL. Read more in our guide on using Claude Code in VS Code and Cursor.

Installing Claude Code on Linux

On Linux, open your terminal and run the native install script. The process is identical to macOS. The installer detects your distribution and architecture, downloads the correct binary, and sets up your PATH. Verify with “claude --version” after installation. Native installations on Linux auto-update in the background just like on Mac.

The npm Method (Deprecated)

Older guides may reference installing Claude Code globally through npm. While this still works, Anthropic has deprecated it in favor of native installers. If you have an existing npm installation, migrate by running “claude install” from your terminal, which switches you to the native binary while preserving your settings and project configurations.

If you do use npm, ensure you have Node.js version 18 or higher. Never use sudo with npm install, as this causes permission issues. Instead, configure a user-level npm directory: run “mkdir ~/.npm-global”, then “npm config set prefix ~/.npm-global”, and add ~/.npm-global/bin to your PATH.

Installing the CLI Specifically

There is no separate Claude Code CLI package. When people search for “how to install Claude Code CLI,” they mean the main tool itself. Every installation method above installs the full CLI, which includes both the interactive chat mode and the non-interactive pipeline mode for scripting and automation.

How to Download Claude Code

If you prefer a downloadable binary rather than a command-line installer, the GitHub releases page at github.com/anthropics/claude-code has binaries for each platform. You can also access Claude Code in your browser at claude.ai/code with no download needed, which is useful for trying it before committing to a local install.

IDE extensions are available separately in the VS Code marketplace and JetBrains marketplace. These connect to the Claude Code backend on your machine and are covered in our VS Code and Cursor integration guide.

Verifying and Troubleshooting Your Installation

Run “claude --version” to confirm Claude Code is installed correctly. If you see “command not found,” your PATH is likely not configured. Close and reopen your terminal to refresh, then try again. On macOS, check that your .zshrc file includes the installation directory in PATH. On Linux, check .bashrc. On Windows, ensure Git Bash or your preferred terminal can find the binary.
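If you would rather check from a script, Python’s shutil.which performs a PATH lookup similar to your shell’s. This small sketch reports where claude was found or lists the directories that were searched; the function name find_claude is hypothetical.

```python
import os
import shutil
from typing import Optional


def find_claude(path: Optional[str] = None) -> Optional[str]:
    """Full path of the claude executable, or None if it is not on PATH.

    `path` overrides the PATH string to search (useful for testing).
    """
    return shutil.which("claude", path=path)


if __name__ == "__main__":
    location = find_claude()
    if location:
        print(f"claude found at {location}")
    else:
        print("claude not on PATH; directories searched:")
        for directory in os.environ.get("PATH", "").split(os.pathsep):
            print("  ", directory)
```

If the install directory is missing from that list, add it to PATH in .zshrc or .bashrc as described above.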

Anthropic provides a diagnostic command, “claude doctor,” that checks your installation type, version, authentication status, and configuration for common issues. Run this whenever something seems off.

If troubleshooting does not resolve the issue, a clean reinstall usually works. Remove the binary and any configuration files, then follow the installation steps again from scratch.

Next Steps

With Claude Code installed, head to our guide on setting up Claude Code for authentication, CLAUDE.md configuration, updates, and your first session. When you are ready to start working, our complete usage guide covers practical workflows and tips for getting the best results.

How to Set Up Claude Code: Configuration, Updates, and Your First Session

Installing Claude Code is only step one. Proper setup, including authentication, CLAUDE.md configuration, permissions, and understanding the update cycle, is what turns a fresh install into a productive daily tool. This guide covers the full lifecycle from first launch to keeping Claude Code current, checking your usage, and even uninstalling cleanly if you ever need to.

If you have not installed Claude Code yet, start with our installation guide for Mac, Windows, and Linux. For a general overview, see what Claude Code is.

Navigate to your project directory in the terminal and type “claude” to launch your first session. On first launch, Claude Code opens a browser window asking you to authenticate. Sign in with your Claude Pro, Max, or Anthropic Console account. After authentication, you return to your terminal and Claude Code is ready.

If the browser does not open automatically, copy the URL displayed in your terminal and paste it into your browser. This is common on WSL and headless server environments. Once authenticated, credentials are stored locally so future launches start immediately.

For API key authentication instead of browser-based OAuth, set the ANTHROPIC_API_KEY environment variable in your shell. Add the export line to your shell configuration file (.zshrc on macOS, .bashrc on Linux) to persist it across sessions. Get your API key from the Anthropic Console at console.anthropic.com.

Setting Up CLAUDE.md

CLAUDE.md is the most important configuration file for getting good results. It is a Markdown file that Claude Code reads at the start of every session, containing your coding standards, architecture decisions, preferred libraries, common commands, and any instructions you want Claude to follow consistently.

Run /init in a Claude Code session to generate a CLAUDE.md with recommended defaults. Claude Code analyzes your project structure and creates a starting configuration you can customize. Alternatively, create the file manually in your project root.

CLAUDE.md works in a hierarchy. A global file at ~/.claude/CLAUDE.md applies to all projects and is a good place for personal coding preferences. A project-level file at ./CLAUDE.md in your repo root contains project-specific instructions. You can add CLAUDE.md files in subdirectories for component-specific guidance. More specific settings override more general ones.
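The lookup order described above can be sketched in Python: collect the global file first, then any CLAUDE.md from the project root down to the directory you are working in, so later entries are the more specific ones. The function name and example layout are hypothetical, and Claude Code’s actual loading rules (for example, when subdirectory files are read) may differ.

```python
from pathlib import Path


def claude_md_chain(home: Path, project_root: Path, cwd: Path) -> list[Path]:
    """CLAUDE.md files that apply in `cwd`, most general first.

    `cwd` must be inside `project_root`.
    """
    chain = []
    global_file = home / ".claude" / "CLAUDE.md"    # personal preferences
    if global_file.is_file():
        chain.append(global_file)
    directory = project_root
    candidates = [directory / "CLAUDE.md"]          # project-level file
    for part in cwd.relative_to(project_root).parts:
        directory = directory / part
        candidates.append(directory / "CLAUDE.md")  # subdirectory files
    chain.extend(c for c in candidates if c.is_file())
    return chain
```

Reading the list from first to last mirrors the override rule: when two files disagree, the later, more specific one should win.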

Include your preferred languages and frameworks, naming conventions, testing expectations, directory structure explanations, deployment procedures, and review checklists. The more context you provide, the better Claude Code aligns with your workflow.

Configuring Permissions and Security

Claude Code asks for permission before executing impactful actions like writing files and running shell commands. You can pre-configure allowed tools in your settings.json file to avoid repeated prompts. For example, allow read operations and git commands while requiring approval for file writes and arbitrary bash commands.

The settings.json file also lets you configure model preferences, maximum token limits, and hooks that run shell commands before or after Claude Code actions. You might set up a hook that auto-formats code after every file edit, or runs your linter before every commit. WSL 2 installations support sandboxing for enhanced security, isolating command execution in a controlled environment.
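As an example of the permission and hook settings just described, this Python sketch builds a .claude/settings.json payload. The Tool(pattern) rule style and the PostToolUse hook event follow Claude Code’s documented settings format, but the specific rules and the “npm run format” command are assumptions; verify them against the settings documentation for your version.

```python
import json

settings = {
    "permissions": {
        # Pre-approved: read-only tools and a few git commands.
        # Anything not listed here (file writes, other bash) still prompts.
        "allow": [
            "Read", "Grep", "Glob",
            "Bash(git status)", "Bash(git diff:*)", "Bash(git log:*)",
        ],
    },
    "hooks": {
        "PostToolUse": [
            {
                "matcher": "Edit|Write",   # run after file edits
                "hooks": [{"type": "command", "command": "npm run format"}],
            }
        ]
    },
}

if __name__ == "__main__":
    print(json.dumps(settings, indent=2))
```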

Setting Up Custom Slash Commands

Create custom slash commands to package repeatable workflows your team can share. Store definitions in .claude/commands/ for project-specific commands or ~/.claude/commands/ for personal commands across all projects. For example, a /review-pr command that runs a standard code review workflow, or a /deploy-staging command for your deployment checklist. These save time and tokens by packaging multi-step instructions into a single shortcut.
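A project command is just a Markdown file in .claude/commands/, named after the command, and $ARGUMENTS in the file is replaced by whatever you type after the command name. The Python sketch here creates a /review-pr command; the checklist text and helper name are placeholders.

```python
from pathlib import Path

# Hypothetical review checklist; $ARGUMENTS receives the text after /review-pr.
REVIEW_PR = """Review pull request $ARGUMENTS.
1. Summarize what changed and why.
2. Flag missing tests, unclear names, and risky error handling.
3. Finish with a short list of requested changes.
"""


def add_command(project_root: str, name: str, body: str) -> Path:
    """Write .claude/commands/<name>.md under the project root."""
    path = Path(project_root) / ".claude" / "commands" / f"{name}.md"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(body)
    return path
```

After running add_command(".", "review-pr", REVIEW_PR), typing /review-pr followed by a pull request number in a session sends the checklist with that number filled in.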

How to Check Claude Code Usage

Use the /cost command during a session to see your current token usage, duration, and estimated cost. For API users paying per token, this is essential for budget tracking. For subscription users, /cost shows consumption but does not directly relate to billing since your cost is fixed monthly.

For teams, administrators can view detailed cost and usage reporting in the Anthropic Console. When you first authenticate with a Console account, a workspace called “Claude Code” is automatically created for centralized tracking. You can set spend limits on this workspace to prevent unexpected costs.

The status line can be configured to display token usage continuously, so you always know how much context you are consuming. This helps you decide when to use /compact to reduce context size or /clear to start fresh. For a full cost analysis and plan comparison, see our Claude Code pricing guide.

How to Update Claude Code

If you installed using the native installer, updates happen automatically in the background. Claude Code checks for updates on startup and periodically while running, then downloads and installs them without any action from you. You see a notification once an update has been installed.

For Homebrew installations on macOS, auto-update is not supported. Run “brew upgrade claude-code” periodically. For WinGet on Windows, run “winget upgrade Anthropic.ClaudeCode” to update. Setting a recurring reminder to check weekly or biweekly is good practice for these methods.
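If you keep Claude Code on several machines, a small helper in your shell profile can run the right update command for each install method. This is a sketch: `update_claude_code` is a hypothetical name, and it uses only the commands listed above. WinGet users would run the winget command from PowerShell instead.

```shell
#!/bin/sh
# Run the update command that matches how Claude Code was installed.
update_claude_code() {
  case "$1" in
    homebrew) brew upgrade claude-code ;;   # Homebrew does not auto-update
    native)   claude update ;;              # forces an immediate update check
    *)
      echo "usage: update_claude_code homebrew|native" >&2
      return 2
      ;;
  esac
}
```

For example, `update_claude_code homebrew` on a Mac that installed through Homebrew.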

To force an immediate update check on any installation type, run “claude update.” Check your current version with “claude --version” and compare it against the latest release on the Claude Code GitHub repository or npm page.

How to Upgrade Your Claude Code Plan

Upgrading from Pro to Max or between Max tiers happens through your account settings on claude.ai. The change takes effect immediately with new usage limits applying to your current session. No reinstallation or reconfiguration is needed since Claude Code automatically detects your subscription level. For help choosing a plan, see our pricing breakdown.

Running Claude Doctor for Diagnostics

If something seems wrong, run “claude doctor” to check your installation. It verifies installation type, version, configuration, authentication status, and connectivity. The output identifies common issues like outdated versions, missing PATH entries, or configuration errors, and suggests fixes. This is the first thing to try when Claude Code behaves unexpectedly.

How to Uninstall Claude Code

If you need to remove Claude Code for a clean reinstall or to switch installation methods, the process depends on how you installed it.

For native installations on macOS and Linux, remove the Claude Code binary and the version data directory. For WinGet installations on Windows, use the WinGet uninstall command or standard Windows app removal. For WSL, uninstall from within your Linux environment using the Linux removal process. For Homebrew, use the brew uninstall command. For deprecated npm installations, use npm uninstall with the global flag.

Uninstalling removes the binary but not your configuration files. To do a complete clean removal, also delete the ~/.claude directory and ~/.claude.json file, which contain your settings, allowed tool configurations, MCP server configurations, and session history. On Windows, these are in your user profile directory. Project-specific settings live in .claude/ and .mcp.json within each project folder.
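For a native macOS or Linux install, the full clean removal described above might look like the following dry-run sketch. The binary and data paths (~/.local/bin/claude and ~/.local/share/claude) are assumptions based on the native installer's usual locations. Confirm them first (for example with `command -v claude`). By default the script only prints what it would remove; set `DRY_RUN=0` to actually delete.

```shell
#!/bin/sh
# Dry-run sketch of a complete native-install removal.
# Paths are assumed defaults; verify them before running with DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "would run: $*"
  else
    "$@"
  fi
}

# Binary and version data (assumed native-installer locations)
run rm -f "$HOME/.local/bin/claude"
run rm -rf "$HOME/.local/share/claude"
# Settings, allowed tools, MCP servers, and session history
run rm -rf "$HOME/.claude"
run rm -f "$HOME/.claude.json"
```

Project-level .claude/ directories and .mcp.json files are untouched. Delete them per project if you want those settings gone as well.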

If you have IDE extensions installed in VS Code, Cursor, or JetBrains, uninstall those separately through each editor’s extension manager.

For most troubleshooting scenarios, running “claude doctor” first is better than jumping straight to a full uninstall and reinstall. But when you do need a fresh start, removing everything including configuration files and then following the installation guide again is the most reliable path.

Next Steps

With Claude Code set up and configured, you are ready to start building. Our complete guide to using Claude Code covers practical workflows, essential commands, and tips for effective daily use. For terminal-specific workflows, see how to use Claude Code in the terminal. To understand the technical details of how it all works, read how Claude Code works under the hood.

How to Use Claude Code in the Terminal: Commands, Shortcuts, and Automation

The terminal is where Claude Code is most powerful. Running directly in your command line, it reads your project files, executes commands, and modifies your codebase through natural language conversation. This guide covers everything specific to the terminal experience, from launching and exiting sessions to pipeline automation and multi-agent workflows.

If you have not installed Claude Code yet, follow our installation guide first. For a broader overview of all Claude Code capabilities beyond the terminal, see the complete usage guide.

Navigate to your project directory and type “claude” to launch an interactive session. Claude Code reads your project structure, loads any CLAUDE.md configuration files, and connects to configured MCP servers. You will see a prompt indicator where you can start typing natural language requests.

If this is your first time, Claude Code opens a browser window for authentication. Sign in with your account and return to the terminal. Future launches skip this step. For detailed authentication options, see our setup and configuration guide.

To run a single prompt without entering interactive mode, use “claude -p” followed by your prompt in quotes. Claude Code prints the response and exits, which is useful for quick one-off tasks and scripting. To resume your most recent conversation, use “claude -c”, which picks up where you left off.

How to Run Claude Code in the Terminal

Once your session is active, type requests in plain English at the prompt. Claude Code might read files to understand context, propose edits shown as diffs, or suggest terminal commands to execute. You approve or reject each action.

Reference specific files using @ followed by the path. “@package.json explain the dependencies” focuses Claude on that file. Reference directories like “@src/components/ what does each component do” to scope analysis. If you do not know the exact path, Claude Code can search for it.

Run shell commands directly by prefixing with an exclamation mark. “!git status” shows your git status, “!npm test” runs tests, “!ls -la” lists files. This executes the command directly rather than going through Claude’s conversational interface, which is faster and uses fewer tokens.

Essential Terminal Commands and Shortcuts

The /clear command resets conversation context. Use it when switching tasks so you are not paying for stale context. The /compact command summarizes your conversation to reduce context size while preserving important details. You can customize what to keep with “/compact Focus on the API changes we discussed.” The /cost command shows token usage and estimated cost.

The /model command switches AI models during your session. “/model sonnet” for faster, cheaper responses. “/model opus” for the most capable reasoning. The /init command generates a CLAUDE.md for your project. The /help command lists everything available including custom commands.

Tab completion works for file paths and slash commands. Command history with the up arrow recalls previous prompts. Word deletion with Option-Delete and word navigation with Option-Arrow work on most systems.

How to Exit Claude Code

Type /exit at the prompt or press Ctrl+C twice to end a session. To pause and return later, use /exit and then run “claude -c” next time to resume. Ctrl+D sends an end-of-input signal that also closes the session.

If Claude Code is in the middle of a long operation, press Ctrl+C once to cancel the current action without exiting. Press Ctrl+C again or type /exit to close entirely. Conversation history is preserved automatically for later resumption.

Running Claude Code for Automation and Pipelines

The non-interactive “claude -p” mode is designed for scripting and CI/CD. Pipe input to Claude Code and capture its output, composing it with other Unix tools. Practical examples include monitoring logs with “tail -f app.log | claude -p 'alert me if there are anomalies'” and reviewing changed files with “git diff main --name-only | claude -p 'review these files for security issues'”.

For CI/CD integration, Claude Code can review pull requests, generate changelogs, check code quality, or automate anything that benefits from AI understanding of your codebase. The pipeline mode outputs to stdout, making it easy to capture results in automation scripts.
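The git diff example can be wrapped in a reusable function for scripts or CI jobs. This is a sketch that assumes Claude Code is installed and authenticated on the machine running it. `review_changed_files` is a hypothetical name; it only adds an empty-diff check around the pipe shown above.

```shell
#!/bin/sh
# Review files changed relative to a base branch (default: main) using
# Claude Code's non-interactive print mode. The review goes to stdout.
review_changed_files() {
  base=${1:-main}
  # Same pipeline as above: list changed file names only.
  files=$(git diff "$base" --name-only) || return 1
  if [ -z "$files" ]; then
    echo "No files changed relative to $base."
    return 0
  fi
  printf '%s\n' "$files" | claude -p 'review these files for security issues'
}
```

In a pipeline step you might run `review_changed_files main > review.txt` and attach the file to the build output.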

Working With Multiple Sessions and Agents

Run multiple Claude Code sessions simultaneously in different terminal windows or tabs. Each maintains its own conversation context and works on different tasks. The /teleport command moves a session between surfaces, such as from your terminal to the Claude desktop app for visual diff review.

Claude Code supports spawning sub-agents for parallel work. A lead agent coordinates multiple instances, each with its own context window, working on different parts of a large task simultaneously. This is powerful for big refactoring jobs or feature implementations spanning many files.

Terminal Tips for Better Results

Keep your terminal window at least 100 columns wide. Claude Code renders diffs and code snippets that look best with this width, and narrow windows can cause rendering issues. Use a modern terminal emulator: Terminal or iTerm2 on macOS, Windows Terminal on Windows, or any modern terminal on Linux. The terminal should support ANSI colors and Unicode for proper diff and status rendering.
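To check the 100-column recommendation before launching a session, you could source a small helper into your shell profile. This is a sketch: `check_terminal_width` is a hypothetical name. It trusts `$COLUMNS` when your shell sets it, falls back to `tput cols`, and assumes 80 columns if neither is available.

```shell
#!/bin/sh
# Warn when the terminal is narrower than 100 columns.
check_terminal_width() {
  cols=${COLUMNS:-$(tput cols 2>/dev/null || echo 80)}
  if [ "$cols" -lt 100 ]; then
    echo "Terminal is $cols columns wide; widen it to at least 100 for Claude Code diffs."
    return 1
  fi
  echo "Terminal width OK ($cols columns)."
}
```

Running `check_terminal_width && claude` only starts a session when the window is wide enough.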

For extended sessions, monitor context with /cost and use /compact when it grows large. Long conversations accumulate tokens and become more expensive per message. Starting fresh with /clear when switching tasks is the most cost-effective habit. For more on managing costs, see our pricing guide.

To understand what happens behind the scenes when you interact with Claude Code, and how to extend it with external tools through MCP, read how Claude Code works.

How to Use Claude Code: A Practical Guide for Developers

Claude Code is a powerful AI coding assistant, but getting the most out of it requires understanding its workflows, commands, and best practices. This guide covers everything from basic interactions to advanced techniques that will help you use Claude Code effectively in your daily development work.

If you have not installed Claude Code yet, start with our installation guide for Mac, Windows, and Linux. For background on what the tool does, see what is Claude Code.

Open your terminal, navigate to your project directory, and type “claude” to launch an interactive session. Claude Code reads your project files and understands the structure of your codebase from the start. You type natural language requests at the prompt, and Claude Code responds by reading files, proposing changes, and executing commands with your approval.

A good first interaction is asking Claude Code to explain your codebase. Type something like “give me an overview of this project’s architecture” and it analyzes your files and provides a summary. This helps you see what Claude Code understands and gives you a baseline for more complex requests.

Essential Commands You Should Know

Claude Code includes built-in slash commands that control its behavior. The /init command generates a CLAUDE.md configuration file for your project. The /clear command resets your conversation context, essential when switching between unrelated tasks to avoid wasting tokens on stale information. The /cost command shows your current session’s token usage. The /compact command summarizes your conversation history to free up context window space while preserving important details.

The /model command switches between AI models mid-session. Sonnet is the default and handles most coding tasks efficiently at lower cost. Opus provides the most capable reasoning for complex decisions. Use /help to see all available commands including custom ones you have created.

Use the @ symbol to reference specific files or directories. For example, “@src/api/users.js explain the authentication flow” directs Claude Code to focus on that file. Execute shell commands within a session by prefixing them with an exclamation mark. “!npm test” runs your test suite without leaving the conversation and costs fewer tokens than asking Claude to do it conversationally. For a deep dive into terminal-specific techniques, see how to use Claude Code in the terminal.

Common Development Workflows

For bug fixes, describe the problem and let Claude Code investigate. It reads error messages, traces through your code, identifies the root cause, and proposes a fix as a diff you review and approve. For feature implementation, describe what you want built and Claude Code creates or modifies the necessary files.

For code reviews, ask Claude Code to review recent changes or a specific file. It checks for security vulnerabilities, performance problems, and maintainability concerns. For refactoring, describe the transformation you want and Claude handles multi-file changes.

Git workflows are another strong use case. Claude Code stages changes, writes commit messages, creates branches, and opens pull requests when connected to GitHub through MCP. Tell it “commit these changes with a descriptive message” and it handles the operations. Learn how to connect these external tools in our guide on how Claude Code works and how to extend it with MCP.

How to Use Claude Code Effectively

Be specific in your requests. Instead of “fix this code,” say “the login endpoint returns a 500 error when the email field is empty. Find and fix the missing validation.” The more context you provide upfront, the better the results.

Use CLAUDE.md files to give Claude Code persistent context about your project. Include coding standards, preferred libraries, architecture decisions, and review checklists. Claude Code reads these at the start of every session, so you do not need to repeat this information in every prompt. For details on setting this up, see our setup and configuration guide.
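You can also write a starter CLAUDE.md by hand, or generate one with /init and edit it. The contents below are placeholders that illustrate the categories mentioned above (standards, libraries, architecture, review checklist). Replace them with your project's real conventions. The script refuses to overwrite an existing file.

```shell
#!/bin/sh
# Create a starter CLAUDE.md in the project root (placeholder content).
if [ -e CLAUDE.md ]; then
  echo "CLAUDE.md already exists; edit it instead." >&2
else
  cat > CLAUDE.md <<'EOF'
# Project notes for Claude Code

## Coding standards
- TypeScript strict mode; explain any use of `any` in a comment
- Run `npm run lint` and `npm test` before proposing a commit

## Preferred libraries
- HTTP: built-in fetch
- Testing: vitest

## Architecture
- API handlers live in src/api/, business logic in src/services/

## Review checklist
- Input validation on every endpoint
- No secrets in code or logs
EOF
fi
```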

Clear context between unrelated tasks using /clear. Stale context wastes tokens on every subsequent message. Use /rename before clearing so you can find the session later, then /resume to return when needed.

Switch models based on task complexity. Use Sonnet for writing tests, fixing lint errors, and generating boilerplate. Switch to Opus for complex multi-step reasoning, architectural decisions, and debugging subtle issues.

Using Claude Code in Your IDE

The terminal is the primary interface, but Claude Code also integrates with VS Code, Cursor, Windsurf, and JetBrains IDEs. These extensions show proposed changes as visual diffs, let you share selected code as context, and provide a sidebar panel for interaction without leaving your editor. For complete setup instructions and IDE-specific tips, see our dedicated guide on using Claude Code in VS Code and Cursor.

Non-Interactive Mode and Automation

Claude Code supports non-interactive mode for scripting and CI/CD integration. Run “claude -p” followed by a quoted prompt to get a single response printed to stdout. This enables workflows like monitoring logs with “tail -f app.log | claude -p 'alert me if you see anomalies'” or reviewing changes with “git diff main --name-only | claude -p 'review these files for security issues'”.

You can also automate translations, generate documentation, run bulk operations across files, and integrate Claude Code into any automated pipeline. This composability is what makes it fundamentally different from chat-based AI assistants.
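For bulk operations, you can run print mode in a loop. This is a minimal sketch that assumes `claude` is on your PATH. `summarize_files` is a hypothetical helper that feeds each file to `claude -p` on stdin and writes the response to a sibling `.summary.md` file. The prompt and the output naming are illustrative.

```shell
#!/bin/sh
# Generate a one-paragraph summary for each file given as an argument,
# saving it next to the source as <file>.summary.md.
summarize_files() {
  for f in "$@"; do
    if [ ! -f "$f" ]; then
      echo "skipping $f (not a regular file)" >&2
      continue
    fi
    claude -p 'Summarize what this file does in one paragraph.' < "$f" > "$f.summary.md"
  done
}
```

For example, `summarize_files src/*.js`. Review the generated summaries before committing them.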

Managing Costs While Using Claude Code

Claude Code consumes tokens for each interaction, with costs varying by codebase size, query complexity, and conversation length. Use /cost to monitor usage and /compact to reduce context when it grows large. Clearing context between tasks and choosing Sonnet over Opus for routine work are the most effective ways to keep costs down.

For a full breakdown of subscription plans, API pricing, and cost optimization strategies, read our Claude Code pricing guide. To understand the technical underpinnings of token usage and context windows, see how Claude Code works.

Claude Code Security: Everything You Need to Know Before Getting Started

A practical guide to how Claude Code works, who can use it, how to access it securely, and what you should know about permissions, data handling, and safe usage.

IN THIS GUIDE

1. What Is Claude Code?

2. Who Can Use Claude Code?

3. How to Access & Install Claude Code

4. Understanding the Security Model

5. Permissions & What Claude Code Can Do

6. Data Privacy & How Your Code Is Handled

7. Security Best Practices

8. Frequently Asked Questions

1. WHAT IS CLAUDE CODE?

Claude Code is Anthropic’s command-line tool for agentic coding. Unlike the web-based Claude.ai chat interface, Claude Code runs directly in your terminal and can interact with your local codebase — reading files, writing code, running commands, and managing project tasks on your behalf.

Think of it as having an AI pair programmer that sits inside your development environment. You give it natural language instructions like “refactor the authentication module to use JWT tokens” or “find and fix the bug causing the test suite to fail,” and Claude Code handles the implementation, working across your files and project structure.

This is a fundamentally different interaction model from chatting in a browser. Because Claude Code operates locally on your machine with access to your filesystem and terminal, understanding its security model is essential before you start using it.

Key capabilities at a glance:

Terminal-Native — Runs directly in your command line, not in a browser window.

File System Access — Reads, writes, and modifies files in your project directory.

Command Execution — Can run shell commands, scripts, and development tools.

Permission Controls — Configurable approval system for different action types.

2. WHO CAN USE CLAUDE CODE?

Claude Code is designed for software developers, engineers, and technical teams who work with codebases regularly. It’s available to users on several Anthropic plan tiers, though availability and usage limits may vary.

Plan Availability

Claude Code is accessible to users with a Claude Pro, Max, Team, or Enterprise subscription, and it’s also available to API users. The exact feature set and rate limits can differ depending on your plan. Since Anthropic frequently updates plan details and pricing, it’s best to check the Claude support page (https://support.claude.com) or the official documentation (https://docs.claude.com) for the most current information on what’s included in each tier.

Technical Requirements

The npm distribution of Claude Code requires Node.js 18 or later; Anthropic’s native installer does not depend on Node.js. Claude Code supports macOS and Linux, and on Windows it runs either natively or through Windows Subsystem for Linux (WSL).

[Note: You don’t need to be an expert in AI or prompt engineering to use Claude Code effectively. If you’re comfortable with a terminal and familiar with your project structure, you can be productive quickly. That said, understanding the security implications of giving an AI tool access to your filesystem is important — which is exactly what the rest of this article covers.]

3. HOW TO ACCESS & INSTALL CLAUDE CODE

Getting started with Claude Code is a straightforward process. Here’s a walkthrough of the core steps.

Step 1: Ensure Node.js Is Installed

This method installs Claude Code from npm, so you’ll need Node.js 18 or later on your machine. If you don’t have it, download it from nodejs.org or use a version manager like nvm.

Step 2: Install via npm

Install Claude Code globally using npm. The package is @anthropic-ai/claude-code and can be found on npmjs.com (https://www.npmjs.com/package/@anthropic-ai/claude-code).

Step 3: Authenticate

After installation, you’ll need to authenticate with your Anthropic account. Claude Code will guide you through the authentication flow in your terminal.

Step 4: Navigate to Your Project & Start

Navigate to the root of the project you want to work in, then launch Claude Code. It will begin by understanding your project’s structure and context.

Terminal commands:

```shell
# Install Claude Code globally
npm install -g @anthropic-ai/claude-code

# Navigate to your project
cd /path/to/your/project

# Launch Claude Code
claude
```

[Important: Always verify the exact installation steps in the official Claude Code documentation (https://docs.claude.com/en/docs/claude-code/overview), as the process or package name may evolve over time.]

4. UNDERSTANDING THE SECURITY MODEL

Because Claude Code operates directly on your machine — reading your code, running commands, and writing files — Anthropic has built a security model around user consent and explicit approval. Here’s how to think about it.

The Approval System

Claude Code uses a permission system that requires your approval before it takes potentially impactful actions. When Claude Code wants to execute a shell command, write to a file, or perform other actions that modify your system, it presents the action for your review and waits for you to approve it before proceeding.

This means Claude Code won’t silently rewrite your files or run arbitrary commands. You stay in the loop and maintain control over what actually happens on your machine.

Trust Levels & Configuration

Claude Code supports configurable permission levels that let you decide how much autonomy to grant. You can keep things locked down with manual approval for every action, or allow certain categories of operations (like file reads) to proceed automatically. The documentation provides details on configuring these permission modes to match your security preferences and workflow.

[Key Principle: Claude Code follows a “human in the loop” design philosophy. The default behavior is to ask before acting. You choose how much latitude to give it, not the other way around.]

5. PERMISSIONS & WHAT CLAUDE CODE CAN DO

It’s worth being explicit about the types of actions Claude Code is capable of, so you can make informed decisions about when and how to use it.

What Claude Code Can Do

Read files — Claude Code can read any file within your project directory to understand context, review code, or analyze your project structure.

Write and edit files — It can create new files, modify existing ones, refactor code, and make edits across multiple files in a single operation.

Execute shell commands — Claude Code can run terminal commands like git, npm, build scripts, test suites, and other CLI tools available in your environment.

Interact with MCP servers — Claude Code supports the Model Context Protocol (MCP), which allows it to connect to external tools and services through standardized integrations. This extends its capabilities beyond your local filesystem.

What to Be Aware Of

Because Claude Code can run shell commands, it has the same level of access to your system as your terminal session does. This means it could theoretically install packages, modify system files (if you have permission), make network requests, or interact with services your machine has access to.

[Security Consideration: Always review the commands Claude Code proposes before approving them, especially commands that install packages, modify system-level configurations, or interact with production environments. Treat the approval prompt the same way you’d treat pasting a command from the internet into your terminal — with informed caution.]

6. DATA PRIVACY & HOW YOUR CODE IS HANDLED

One of the most common concerns developers have about AI coding tools is: “What happens to my code?” It’s a fair question, especially if you’re working on proprietary or sensitive projects.

How Conversations Are Processed

When you use Claude Code, your prompts and the relevant code context are sent to Anthropic’s API for processing. This is how Claude generates its responses and proposed actions. The data in transit is encrypted, and Anthropic has published usage policies that outline how data is handled.

Data Retention & Training

Anthropic’s data policies apply to Claude Code usage. The specifics around whether your inputs are used for model training, how long data is retained, and what protections are in place depend on your plan type (particularly for Enterprise and API users, who typically have stricter data handling agreements).

[Recommended: Review Anthropic’s current privacy policy and terms of service (https://www.anthropic.com) for the most accurate, up-to-date information on data handling. Enterprise and Team plans generally offer additional data protections and commitments. If you’re working with highly sensitive code, this is worth investigating before adoption.]

Working with Sensitive Projects

If you’re working on projects with strict confidentiality requirements — government contracts, financial systems, healthcare data — talk to your security team before adopting Claude Code. Consider what code and context is being sent externally, whether your compliance requirements allow third-party AI tool usage, and what data handling agreements your plan provides.

7. SECURITY BEST PRACTICES

Here are practical recommendations for using Claude Code securely in your day-to-day workflow.

Start with Restrictive Permissions

When you first start using Claude Code, keep the approval requirements tight. Manually review every file write and shell command until you’re comfortable with the types of actions it proposes. You can loosen permissions over time as you build confidence in the tool’s behavior within your specific workflow.

Be Mindful of Environment Variables & Secrets

Claude Code can read files in your project directory, including .env files, configuration files with API keys, and other sensitive data. Listing those files in .gitignore keeps them out of version control but does not by itself stop Claude Code from reading them. Consider keeping secrets outside the directories where you run Claude Code, or blocking them with deny rules in your settings.
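Deny rules are one way to block reads of secret files even inside the project. This sketch assumes the `Read(...)` path-rule syntax from Anthropic's permissions documentation. The file patterns are examples, so adapt them to wherever your secrets actually live.

```shell
#!/bin/sh
# Project-level deny rules that stop Claude Code from reading common secret files.
mkdir -p .claude
if [ -e .claude/settings.json ]; then
  echo ".claude/settings.json already exists; merge these rules by hand." >&2
else
  cat > .claude/settings.json <<'EOF'
{
  "permissions": {
    "deny": [
      "Read(./.env)",
      "Read(./.env.*)",
      "Read(./secrets/**)"
    ]
  }
}
EOF
fi
```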

Review Before Approving

This one is simple but critical: actually read the commands and file changes Claude Code proposes. Don’t rubber-stamp approvals. The tool is powerful and generally makes good suggestions, but every automated system can produce unexpected results — especially with complex or ambiguous instructions.

Use Version Control

Always work in a Git repository (or equivalent version control system) when using Claude Code. This gives you a safety net: if Claude Code makes changes you don’t like, you can easily review diffs and revert. Commit your work before starting a Claude Code session so you have a clean baseline to compare against.
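The commit-before-you-start habit is easy to script. In the minimal sketch below, `checkpoint_before_claude` is a hypothetical helper. It creates a new branch, commits all current changes (or an empty commit if the tree is clean), and prints the branch name. Running `git diff` afterwards then shows what changed since the checkpoint. Note that `git add -A` also stages untracked files, so make sure .gitignore already covers any secrets.

```shell
#!/bin/sh
# Create a checkpoint branch and commit before a Claude Code session.
# Branch name defaults to a timestamp; pass one to override.
checkpoint_before_claude() {
  branch=${1:-claude-session-$(date +%Y%m%d-%H%M%S)}
  if ! git rev-parse --is-inside-work-tree >/dev/null 2>&1; then
    echo "not inside a git repository" >&2
    return 1
  fi
  git switch -q -c "$branch" || return 1
  git add -A
  # --allow-empty records a checkpoint even when the tree is already clean
  git commit -q --allow-empty -m "Checkpoint before Claude Code session" || return 1
  echo "$branch"
}
```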

Scope Your Sessions

Rather than giving Claude Code access to your entire home directory, navigate to the specific project you’re working on. This limits the scope of files it can read and reduces the surface area of potential unintended changes.

Keep It Updated

Like any security-relevant tool, keep Claude Code updated to the latest version. Anthropic regularly releases updates that may include security improvements, bug fixes, and new permission controls.

8. FREQUENTLY ASKED QUESTIONS

Q: Is Claude Code free to use?

A: Claude Code is included with Anthropic’s paid plans (Pro, Max, Team, Enterprise) and is also available to API users. The exact pricing, rate limits, and usage caps depend on your plan. Check the Claude support page (https://support.claude.com) for current pricing details.

Q: Can Claude Code access the internet or make network requests?

A: Claude Code can execute shell commands, which means it could potentially make network requests if it runs commands like curl, npm install, or similar tools. This is why the approval system is important — you’ll see the command before it runs and can decline anything you’re not comfortable with.

Q: Does Claude Code send my entire codebase to Anthropic’s servers?

A: Claude Code sends relevant context to the API as needed to respond to your prompts — not your entire codebase at once. However, over the course of a session it may read and send multiple files as it builds understanding of your project. If you’re working with sensitive code, review Anthropic’s data handling policies and consider whether an Enterprise plan’s data protections align with your requirements.

Q: Can I use Claude Code on Windows?

A: Yes. Claude Code runs on macOS and Linux, and on Windows you can run it natively (for example, after installing with WinGet) or through Windows Subsystem for Linux (WSL). Platform support evolves, so check the official docs for the latest information.

Q: What is MCP, and should I be concerned about it?

A: MCP (Model Context Protocol) is a standardized way for Claude Code to connect with external tools and services — things like databases, APIs, or development platforms. It extends what Claude Code can do beyond your local files. MCP server integrations are configurable, and you control which ones are active. Only enable MCP connections to tools and services you trust and need for your workflow.
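One way to keep MCP usage reviewable is a project-scoped .mcp.json that is checked into version control, so every enabled server shows up in code review. In this sketch, the server package and the ./docs path are examples only. `@modelcontextprotocol/server-filesystem` is one of the reference MCP servers, and it restricts access to the directories passed as arguments.

```shell
#!/bin/sh
# Write a project-scoped MCP config exposing only ./docs to one server.
if [ -e .mcp.json ]; then
  echo ".mcp.json already exists; review it instead of overwriting." >&2
else
  cat > .mcp.json <<'EOF'
{
  "mcpServers": {
    "docs-filesystem": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "./docs"]
    }
  }
}
EOF
fi
```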

Q: Can Claude Code accidentally delete my files or break my project?

A: Like any tool that writes files and runs commands, there’s always some risk of unintended changes. This is why the approval system exists and why version control is essential. If you’re using Git and reviewing proposed changes before approving them, you can always revert anything problematic. Start with restrictive permissions and work in branches for extra safety.

Q: Is Claude Code suitable for enterprise and regulated environments?

A: Anthropic offers Enterprise plans with additional security features, data handling commitments, and compliance support. If you’re in a regulated industry, engage with Anthropic’s enterprise sales team to understand what protections are available. Visit anthropic.com/contact-sales for more information.

Q: How does Claude Code compare to GitHub Copilot or Cursor?

A: While all three are AI coding tools, they differ in form factor. GitHub Copilot is primarily an IDE extension that offers inline code completions. Cursor is a full AI-native code editor. Claude Code is a command-line tool that operates as an agentic assistant — it can execute multi-step tasks, run commands, and manage file operations autonomously (with your approval). The right choice depends on your preferred workflow and how much autonomy you want your AI assistant to have.

Q: Where can I find the most up-to-date Claude Code documentation?

A: The official Claude Code documentation is maintained at docs.claude.com/en/docs/claude-code/overview. For general account and billing questions, visit support.claude.com. The npm package page at npmjs.com (https://www.npmjs.com/package/@anthropic-ai/claude-code) also includes installation and release information.


How to Install OpenClaw on DigitalOcean (Cloud VPS Setup Guide)

Running OpenClaw (formerly Clawdbot / Moltbot) on a cloud VPS instead of your personal computer is one of the smartest deployment choices you can make. A DigitalOcean Droplet gives you an always-on server with a static IP, predictable networking, and complete isolation from your personal machine — which means your AI agent runs 24/7 without tying up your laptop or risking your personal files.

DigitalOcean has become one of the most popular hosting choices for OpenClaw, and they even offer a 1-Click Deploy option from their Marketplace that handles the heavy lifting for you. This guide covers both the 1-Click approach and the manual setup, so you can choose whichever fits your comfort level.

If you’re not familiar with OpenClaw yet, start with our guide to what OpenClaw is and how it works. If you’d rather run it locally, we have installation guides for Mac and Windows.

Why Run OpenClaw on a Cloud VPS?

Running OpenClaw locally on your Mac or PC works great, but a cloud deployment solves several common pain points.

Always available. A Droplet runs 24/7 without depending on your laptop being open, plugged in, or connected to the internet. Your AI agent stays active while you sleep, travel, or close your computer.

Security isolation. Your AI agent runs on a separate machine with no access to your personal files, passwords, or accounts. If something goes wrong, your personal computer is unaffected. This is a major advantage given the security considerations around OpenClaw.

Static IP and stable networking. Unlike your home network where the IP can change and ports may be blocked, a Droplet has a fixed public IP address. This makes remote access and messaging integrations more reliable.

Scalable resources. If your agent’s workload grows — more skills, more channels, browser automation — you can vertically scale the Droplet’s CPU and RAM without starting over.

Option A: 1-Click Deploy from the DigitalOcean Marketplace

The fastest way to get OpenClaw running on DigitalOcean is their 1-Click Deploy, available in the DigitalOcean Marketplace. This provisions a security-hardened Droplet with OpenClaw pre-installed, including Docker for sandboxed execution, firewall rules, non-root user configuration, and a gateway authentication token.

Step 1: Deploy the 1-Click App

Log into your DigitalOcean account and navigate to the Marketplace. Search for “OpenClaw” and click “Create OpenClaw Droplet.” You’ll be prompted to choose a Droplet size and region.

For Droplet size, the minimum recommended is 2 vCPUs with 2GB RAM. The 1-Click image currently requires a minimum $24/month Droplet to match the snapshot’s disk and memory requirements, though DigitalOcean is working on reducing this. If budget is a concern, you can try a smaller Droplet with the manual setup method described in Option B.

Choose a datacenter region close to you for the best latency. Add your SSH key during creation — you’ll need it to log in.

Step 2: SSH Into Your Droplet

Wait for the Droplet to finish provisioning. Note that the DigitalOcean dashboard may say “ready” before SSH is actually available — if the connection fails, wait 60 seconds and try again.

ssh root@your-droplet-ip

You’ll see a welcome message from OpenClaw with setup instructions.
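Because the dashboard can report the Droplet as ready before SSH accepts connections, a small retry loop saves you from re-running the command by hand. This is a generic sketch. The retry function is our own helper, not part of OpenClaw or DigitalOcean’s tooling.

```bash
# Run a command until it succeeds, up to a maximum number of attempts.
# Usage: retry <max_attempts> <delay_seconds> <command> [args...]
retry() {
  local max_attempts="$1"
  local delay="$2"
  shift 2
  local attempt=1
  while true; do
    if "$@"; then
      return 0
    fi
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "retry: giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "retry: attempt $attempt failed, waiting ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
  done
}

# Example: try for about five minutes (running the no-op "true" on the server),
# then log in normally.
# retry 5 60 ssh -o ConnectTimeout=10 root@your-droplet-ip true && ssh root@your-droplet-ip
```

On the first successful attempt, ssh may still ask you to confirm the server’s host key.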

Step 3: Configure Your AI Provider

The welcome screen will walk you through initial configuration. You’ll need to choose your AI provider (Anthropic, OpenAI, or Gradient AI) and paste your API key. After entering the key, the OpenClaw service will restart automatically to apply the changes.

Note the Dashboard URL displayed in the welcome message — you’ll use this to access the web-based Control UI from your browser.

Step 4: Access the Dashboard

Open the Dashboard URL in your browser. The 1-Click deployment sets up Caddy as a reverse proxy with automatic TLS certificates from Let’s Encrypt — even for bare IP addresses without a domain name. This means your dashboard connection is encrypted out of the box.

You’ll need to complete the pairing process when first accessing the dashboard. Follow the on-screen instructions to pair your browser.

Step 5: Connect a Messaging Channel

From the dashboard or via SSH, connect your preferred messaging platform. The process is the same as any OpenClaw installation — create a Telegram bot via @BotFather, scan a QR code for WhatsApp, or configure Discord/Slack webhooks.

Once connected, send a test message to confirm everything is working.

Option B: Manual Setup on a Fresh Droplet

If you prefer more control over the installation, or if you want a smaller/cheaper Droplet, you can set up OpenClaw manually on a fresh Ubuntu server.

Step 1: Create a Droplet

Create a new Droplet in the DigitalOcean control panel with the following specs:

Image: Ubuntu 24.04 LTS

Size: At least 2 vCPUs and 2GB RAM (the Basic plan at ~$18/month works well). A 1GB Droplet can work with a swap file but may run out of memory during npm install.

Region: Choose the datacenter closest to you.

Authentication: Add your SSH key.

Step 2: Initial Server Setup

SSH into your new Droplet:

ssh root@your-droplet-ip

Update packages:

apt update && apt upgrade -y

Create a swap file (important for smaller Droplets to prevent out-of-memory errors during installation):

sudo fallocate -l 2G /swapfile

sudo chmod 600 /swapfile

sudo mkswap /swapfile

sudo swapon /swapfile

echo '/swapfile none swap sw 0 0' | sudo tee -a /etc/fstab
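The final tee -a line appends the entry every time it runs, so repeating the setup leaves duplicate swap lines in /etc/fstab. Here is a minimal idempotent variant, assuming you are still logged in as root as in this step. add_swap_entry is a hypothetical helper name, and the optional argument exists only so you can try it on a scratch file first.

```bash
# Append the swap entry to fstab only if that exact line is not already there.
# Argument: path to the fstab file (defaults to /etc/fstab). Run as root.
add_swap_entry() {
  local fstab="${1:-/etc/fstab}"
  local entry='/swapfile none swap sw 0 0'
  # -x: whole-line match, -F: fixed string (no regex), -q: no output
  if grep -qxF "$entry" "$fstab" 2>/dev/null; then
    echo "swap entry already present in $fstab"
  else
    echo "$entry" >> "$fstab"
    echo "added swap entry to $fstab"
  fi
}

# Example:
# add_swap_entry
# swapon --show    # confirm /swapfile is active
```

Because it matches the exact line, an equivalent entry written with different spacing or tabs would not be detected.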

Step 3: Create a Dedicated User

Running OpenClaw as root is a bad idea. Create a dedicated user with limited permissions:

adduser openclaw

usermod -aG sudo openclaw

su - openclaw

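This keeps the AI runtime out of the root account: if a skill misbehaves, the damage is contained to this user’s permissions. Be aware that the usermod line adds the account to the sudo group, which the installation steps below rely on, so the user can still gain root privileges with its password. Once setup is complete, you can remove that membership with sudo deluser openclaw sudo if you don’t need it.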

Step 4: Install Node.js 22

curl -fsSL https://deb.nodesource.com/setup_22.x | sudo -E bash -

sudo apt-get install -y nodejs

Verify:

node --version
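If you want a script to check the result instead of reading it by eye, the helper below parses the string that node --version prints (for example v22.11.0). It assumes any major version of 22 or later is acceptable, which this guide does not state explicitly. node_major_at_least is a hypothetical name.

```bash
# Succeed if a "node --version" string has at least the required major version.
# Returns 0 if new enough, 1 if too old, 2 if the string can't be parsed.
node_major_at_least() {
  local version="${1#v}"        # drop the leading "v"
  local required="$2"
  local major="${version%%.*}"  # keep the part before the first "."
  case "$major" in
    ''|*[!0-9]*) return 2 ;;
  esac
  [ "$major" -ge "$required" ]
}

# Example:
# node_major_at_least "$(node --version)" 22 && echo "Node.js version OK"
```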

Step 5: Install and Configure OpenClaw

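sudo npm install -g openclaw@latest

openclaw onboard --install-daemon

The global install needs sudo because the NodeSource build of Node.js keeps global packages in a root-owned directory. Run the onboarding command itself as the openclaw user, without sudo, so OpenClaw is configured under that account rather than root. The onboarding wizard will prompt for your model provider, API key, and messaging channel configuration, the same process as a local installation.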

Step 6: Configure Firewall Rules

Set up UFW to restrict inbound access:

sudo ufw default deny incoming

sudo ufw default allow outgoing

sudo ufw allow OpenSSH

sudo ufw enable

Do not expose port 18789 (the gateway) publicly unless you have a specific reason and have set up proper authentication and a reverse proxy. For personal use, access the dashboard via SSH tunnel instead.
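To confirm you haven’t opened the gateway port by accident, you can scan the rule list that sudo ufw status prints. This is a rough sketch that assumes ufw’s usual table layout, where each rule line starts with the port (for example 18789/tcp ALLOW Anywhere). It does not detect port ranges or rules tied to a specific destination address, so treat a clean result as a hint rather than proof. gateway_port_exposed is a hypothetical name.

```bash
# Read "ufw status" output on stdin; succeed (exit 0) if any ALLOW rule
# targets the given port, optionally as /tcp or /udp, or as the IPv6 "(v6)" copy.
gateway_port_exposed() {
  local port="${1:-18789}"
  grep -Eq "^${port}(/(tcp|udp))?( \(v6\))?[[:space:]]+ALLOW"
}

# Example:
# if sudo ufw status | gateway_port_exposed 18789; then
#   echo "Warning: port 18789 is allowed through ufw"
# fi
```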

Step 7: Verify the Installation

openclaw doctor

openclaw status

If both return healthy results, your cloud-hosted OpenClaw is live.

VPS-Specific Configuration Tips

Running OpenClaw on a headless VPS introduces a few differences compared to a local desktop setup. Here are the most important things to get right:

Set execution host to gateway. On a VPS, there’s no terminal window open for OpenClaw to run commands in. The gateway process serves as the execution environment. Make sure tools.exec.host is set to gateway in your configuration, or commands will fail silently.

Disable execution consent prompts. On a local machine, consent prompts are a safety feature — OpenClaw asks “are you sure?” before running commands, and you approve them. On a headless VPS, there’s nobody sitting at a terminal to approve anything. Set tools.exec.ask to off or commands will hang indefinitely waiting for input. Compensate by being more careful about which skills you install and which permissions you grant.

Set security level appropriately. The tools.exec.security setting controls what OpenClaw is allowed to do. On a dedicated VPS where you control what’s installed, setting this to full is typically appropriate, because more restrictive levels can stop OpenClaw from calling APIs, fetching URLs, or reaching other external services. On a shared machine, keep it more restrictive.

Know where the configuration lives. On the 1-Click deployment, the main configuration file is /opt/openclaw.env, and it is owned by root. The openclaw user intentionally cannot modify its own configuration; this security feature prevents a misbehaving skill from changing API keys or redirecting outputs. Edit the file as root with sudo nano /opt/openclaw.env and restart the service after changes.

Restart the service after config changes. Changes to the environment or configuration don’t take effect until you restart: sudo systemctl restart openclaw
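If you prefer scripting a change over editing in nano, a small helper can set one KEY=VALUE line in an env file while keeping the file’s root ownership. This is a minimal sketch: the keys you would actually set depend on your deployment and are not listed in this guide, so EXAMPLE_KEY below is a placeholder, and set_env_key is a hypothetical name. It does not handle lines written as export KEY=VALUE.

```bash
# Set KEY=VALUE in an env file: replace existing KEY= lines, or append one.
# The new content is written to a temp file and then copied back with cat,
# so the original file keeps its owner and permissions. Run as root.
set_env_key() {
  local file="$1"
  local key="$2"
  local value="$3"
  local tmp
  tmp="$(mktemp)" || return 1
  # Pass key/value through the environment so awk doesn't reinterpret backslashes.
  K="$key" V="$value" awk '
    index($0, ENVIRON["K"] "=") == 1 { print ENVIRON["K"] "=" ENVIRON["V"]; done = 1; next }
    { print }
    END { if (!done) print ENVIRON["K"] "=" ENVIRON["V"] }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  local status=$?
  rm -f "$tmp"
  return "$status"
}

# Example, from a root shell (EXAMPLE_KEY is a placeholder, not a real setting):
# set_env_key /opt/openclaw.env EXAMPLE_KEY new-value
# systemctl restart openclaw
```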

Remote Access Options

SSH tunnel (recommended for personal use): Access the dashboard without exposing it publicly by forwarding the port through SSH: ssh -L 18789:localhost:18789 openclaw@your-droplet-ip, then open http://localhost:18789 in your browser.

Tailscale (recommended for multi-device access): Install Tailscale on both your Droplet and your devices to create a private, encrypted network. Your OpenClaw instance gets a private Tailscale address and remains inaccessible from the public internet.

Messaging only (simplest): If you’ve connected Telegram, WhatsApp, or another messaging channel, you don’t need to access the dashboard at all for day-to-day use. Just interact with your agent through chat.
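If you open the SSH tunnel often, a small wrapper keeps the port and user in one place. This is a hypothetical convenience function, not an OpenClaw command. It adds ssh’s -N flag so the session only forwards the port instead of opening a remote shell, plus a DRY_RUN=1 switch that prints the command without running it.

```bash
# Forward local port 18789 to the gateway on the Droplet's loopback interface.
# -N tells ssh to forward only, without starting a remote shell.
# Arguments: host, user (default openclaw), port (default 18789).
# Set DRY_RUN=1 to print the command instead of running it.
openclaw_tunnel() {
  local host="$1"
  local user="${2:-openclaw}"
  local port="${3:-18789}"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "ssh -N -L ${port}:localhost:${port} ${user}@${host}"
  else
    ssh -N -L "${port}:localhost:${port}" "${user}@${host}"
  fi
}

# Example: open the tunnel, then browse to http://localhost:18789 (Ctrl+C to close).
# openclaw_tunnel your-droplet-ip
```

Pass a second argument (for example root) if you log in to the Droplet as a different user.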

Cost Expectations

Your total cost for running OpenClaw on DigitalOcean has two components:

Droplet hosting: The 1-Click deployment starts at $24/month (due to the current image size requirements). Manual setup on a smaller Droplet can start around $12-18/month with 2GB RAM. A 1GB Droplet ($6/month) can work for light use with a swap file configured.

AI model API costs: These depend on your provider and usage. Claude Haiku is the most cost-effective for everyday tasks, while Claude Opus is more capable but pricier. Typical personal use runs anywhere from $5-50/month in API costs depending on how active your agent is.
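Adding the two components gives a rough monthly range. The sketch below simply sums each Droplet price quoted above with the $5 to $50 API range. Your actual API spend depends on the model you choose and how active your agent is.

```bash
# Rough monthly total in USD = Droplet price + API spend.
# Prints "low-high".
monthly_cost_range() {
  local droplet="$1"
  local api_low="$2"
  local api_high="$3"
  echo "$((droplet + api_low))-$((droplet + api_high))"
}

monthly_cost_range 24 5 50   # 1-Click Droplet        -> 29-74
monthly_cost_range 18 5 50   # 2 vCPU / 2GB manual    -> 23-68
monthly_cost_range 6 5 50    # 1GB Droplet with swap  -> 11-56
```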

What to Do After Installation

Once your cloud-hosted OpenClaw is running, explore our guide to 10 practical things you can do with OpenClaw. Cron jobs and proactive automation are particularly well-suited to a VPS deployment since your agent is always on and always connected.

And don’t skip the security basics — read our OpenClaw security guide for best practices on running an AI agent safely, especially in a cloud environment.

Related Guides on Code Boost

What Is OpenClaw (Formerly Clawdbot)? The Self-Hosted AI Assistant Explained

How to Install OpenClaw on Windows (Step-by-Step WSL2 Guide)

How to Install OpenClaw on Mac (macOS Setup Guide)

Is OpenClaw Safe? Security Guide for Self-Hosted AI Agents

OpenClaw (formerly Clawdbot / Moltbot) gives you something powerful: an AI assistant that can read your files, run commands on your computer, access your email, manage your calendar, and communicate through your messaging apps. That power comes with real security implications that every user needs to understand before diving in.

This guide covers the known security concerns, the built-in safeguards OpenClaw provides, and the best practices you should follow to run it responsibly. Whether you’ve already installed OpenClaw on your Mac or set it up on Windows, this is essential reading.

New to OpenClaw? Start with our overview of what OpenClaw is before reading this security guide.

The Core Security Tradeoff

OpenClaw’s entire value proposition creates a natural tension with security. For an AI agent to actually do useful things — manage your inbox, organize files, run shell commands, automate browser tasks — it needs broad access to your system. The more capable you want it to be, the more permissions it needs.

This is fundamentally different from a cloud-based chatbot like ChatGPT, which runs on the provider’s servers and can’t touch your local files. OpenClaw trades that isolation for capability. You get an AI that can act on your behalf, but that AI also has the same access to your system as your user account.

The question isn’t whether OpenClaw is perfectly safe — no tool with this level of system access is. The question is whether you understand the risks and are taking appropriate steps to manage them.

What Security Researchers Have Found

OpenClaw’s rapid rise in popularity has attracted scrutiny from cybersecurity firms and researchers. Here’s what’s been reported:

Cisco’s AI security team tested a third-party OpenClaw skill and found it performed data exfiltration and prompt injection without user awareness. Their finding highlighted that the ClawHub skill repository lacked adequate vetting to prevent malicious submissions at the time.

Palo Alto Networks warned that OpenClaw presents a dangerous combination of risks stemming from its access to private data, exposure to untrusted content (like messages from the web or group chats), and ability to perform external communications while retaining memory. They described this as a high-risk mix for autonomous agents.

One of OpenClaw’s own maintainers publicly cautioned on Discord that if someone can’t understand how to run a command line, this is too dangerous a project for them to use safely.

These aren’t theoretical concerns. An AI agent with shell access, internet connectivity, and persistent memory creates a real attack surface, especially when it can receive messages from external sources like group chats or unknown contacts.

OpenClaw’s Built-In Security Features

The OpenClaw project does include several security mechanisms. Understanding what they do — and what they don’t — is important.

DM Pairing System. Unknown senders who message your bot receive a pairing code that you must manually approve via the CLI before the assistant will respond. This prevents random people from controlling your agent.

Loopback Binding. By default, the gateway listens on 127.0.0.1 (localhost only), meaning it’s not exposed to your local network or the internet. Only processes on the same machine can reach it.

Gateway Authentication Token. Even local connections require a token generated during setup. This prevents unauthorized access to the admin dashboard and API.

Sandboxed Execution. Non-main sessions can run in Docker containers, isolating them from your primary system. This is configured via the sandbox setting in your agent configuration.

Execution Consent Mode. When tools.exec.ask is set to “on” in your configuration, OpenClaw will prompt for your approval before running write operations, shell commands, or other potentially destructive actions.

Group Chat Safeguards. In group chats, OpenClaw requires an @mention to respond by default, preventing it from reacting to every message in a channel. Group commands are restricted to the owner.

Open Source and Auditable. All of OpenClaw’s code is published under the MIT license. Anyone can review it, and the developer community has been actively auditing the codebase.
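You can confirm the loopback binding on a Linux machine running OpenClaw by inspecting its listening sockets. The sketch below reads the table printed by ss -ltn (part of iproute2) and reports whether every listener on the gateway port is bound to loopback. It assumes the default gateway port, 18789. gateway_loopback_only and its exit codes are our own convention, not an OpenClaw command.

```bash
# Read "ss -ltn" output on stdin and check the listeners on one port.
# Exit 0: every listener on the port is bound to loopback (127.x.x.x or [::1]).
# Exit 1: at least one listener is on a non-loopback address (e.g. 0.0.0.0 or *).
# Exit 2: nothing is listening on the port.
gateway_loopback_only() {
  local port="${1:-18789}"
  awk -v port="$port" '
    $1 == "LISTEN" && $4 ~ (":" port "$") {
      found = 1
      host = substr($4, 1, length($4) - length(port) - 1)
      sub(/%.*/, "", host)   # drop an interface suffix such as %lo
      if (host !~ /^127\./ && host != "[::1]") exposed = 1
    }
    END {
      if (!found) exit 2
      exit (exposed ? 1 : 0)
    }
  '
}

# Example, on the machine running OpenClaw:
# ss -ltn | gateway_loopback_only 18789; echo "exit status: $?"
```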

Best Practices for Running OpenClaw Safely

Beyond the built-in features, here are the steps you should take to minimize risk:

Use a Dedicated Machine or User Account

The strongest recommendation from the security community is to avoid installing OpenClaw on your primary personal computer — especially one with sensitive documents, financial accounts, or credentials. Ideally, run it on a dedicated device (a Mac Mini, a Raspberry Pi, or a cloud VPS like DigitalOcean), or at minimum create a separate macOS/Linux user account with limited permissions.

Keep the Gateway Bound to Localhost